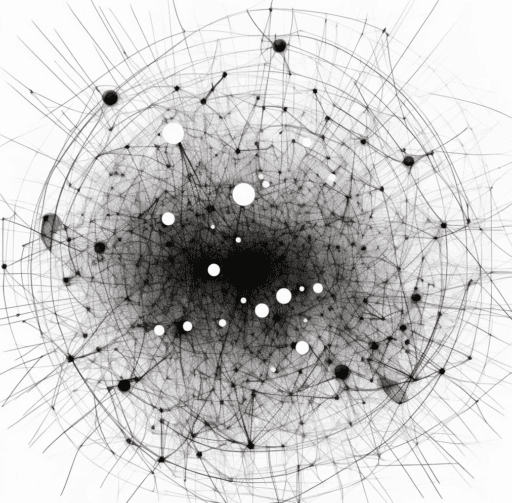

Artificial intelligence (AI) continues its rapid evolution, with new advancements and innovations emerging on a frequent basis. A key enabler of these advancements is the robust infrastructure needed to store, process, and analyze colossal amounts of data. One critical part of this infrastructure is the vector database, a powerful solution for managing unstructured data types including text, audio, images, and videos in numerical form.

Vector databases have gained traction in the AI sphere due to their ability to run similarity searches efficiently over vectors with hundreds or thousands of dimensions. They play a crucial role in powering large language models and other advanced AI applications. In this article, we will delve into the fundamentals of vector databases, their significance in AI infrastructure, and their transformative potential in managing and analyzing unstructured data.

Why are Vector Databases Integral to AI Infrastructure?

The rise of vector databases is closely tied to the growing importance of embeddings in advanced generative AI applications. Embeddings are high-dimensional vectors that represent unstructured data, such as text, images, and audio, in a continuous numerical space. These vectors are essential for advanced generative AI applications such as natural language processing, computer vision, and speech recognition, where they are used to represent and analyze complex data.

The Role of Embeddings in Generative AI

Embeddings play a vital role in advanced generative AI applications such as natural language processing (NLP). In the context of NLP, an embedding captures the semantic and syntactic meaning of words or sentences in a vector format that can be fed as input into deep learning models. Texts with similar meanings end up close to each other in the vector space.

For example, the sentence “I love pizza” could be represented as a 300-dimensional vector. The individual dimensions are learned by the model and usually do not correspond to human-readable features such as word count, the presence of certain keywords, or sentiment. Instead, the meaning is encoded in the vector as a whole, so that similar sentences map to nearby vectors. Embeddings for natural language are typically generated with pre-trained language models such as OpenAI’s GPT models or Google’s BERT.

The length of an embedding vector is determined by the model that generates it and varies from model to model, typically from a few hundred to a few thousand dimensions. The quality of the embeddings can significantly affect the performance of NLP tasks such as language modeling, sentiment analysis, machine translation, and question-answering systems.
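To make the idea of comparing embeddings more concrete, here is a minimal sketch that measures how close two vectors are using cosine similarity, a common choice for embeddings. The 4-dimensional vectors and their values are made up for illustration; a real model would produce much longer vectors, and the helper name `cosine_similarity` is an assumption of this sketch.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of dimensions")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical toy embeddings; real values would come from an embedding model.
pizza = [0.9, 0.1, 0.0, 0.3]
pasta = [0.8, 0.2, 0.1, 0.3]
car = [0.0, 0.9, 0.8, 0.1]

# The two food-related vectors point in a more similar direction.
print(cosine_similarity(pizza, pasta) > cosine_similarity(pizza, car))
```

A value close to 1 means the vectors point in nearly the same direction, which is interpreted as similar meaning.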

Large language models (LLMs) are one of the most advanced AI applications that heavily rely on embeddings. These models have billions of parameters, and embeddings play a crucial role in training and fine-tuning these models to perform a wide range of NLP tasks.

SQL Databases and Their Limitations in Handling High-Dimensional Embeddings

SQL databases are designed to work with structured data that has a fixed schema and is typically stored in tables with rows and columns. In contrast, embeddings are high-dimensional vectors that represent unstructured data such as text, images, and audio in a continuous numerical space. Embeddings can have hundreds or even thousands of dimensions, and finding the most similar vectors is a nearest-neighbor problem. Traditional SQL indexes are built for exact matches and range queries on individual columns, so they are not designed to answer such queries efficiently.
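The following sketch illustrates the limitation with a plain SQLite table. It assumes the embeddings are serialized as JSON text (one of several possible workarounds), and the table name, column names, and toy 3-dimensional vectors are invented for this example. Without a vector-aware index, the only way to find the nearest vector is to read and score every row.

```python
import json
import math
import sqlite3


def euclidean(a, b):
    """Straight-line distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE docs (id INTEGER PRIMARY KEY, text TEXT, embedding TEXT)"
)
rows = [
    (1, "pizza recipe", json.dumps([0.9, 0.1, 0.0])),
    (2, "pasta recipe", json.dumps([0.8, 0.2, 0.1])),
    (3, "car manual", json.dumps([0.0, 0.9, 0.8])),
]
conn.executemany("INSERT INTO docs VALUES (?, ?, ?)", rows)


def nearest(conn, query, k=1):
    """Return the k closest rows as (distance, id, text) tuples."""
    # No ordinary SQL index helps here: every row is fetched, decoded,
    # and scored in Python, so the cost grows linearly with the table.
    scored = [
        (euclidean(query, json.loads(emb)), doc_id, text)
        for doc_id, text, emb in conn.execute(
            "SELECT id, text, embedding FROM docs"
        )
    ]
    return sorted(scored)[:k]


print(nearest(conn, [0.9, 0.1, 0.05]))
```

This works for three rows, but a full scan over millions of high-dimensional vectors on every query quickly becomes too slow for interactive applications.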

Benefits of Vector Databases

Vector databases are natively designed to handle high-dimensional vectors, such as embeddings. They can therefore provide a more scalable and efficient solution for storing, querying, and analyzing large amounts of unstructured data. With their ability to run similarity searches efficiently over vectors with thousands of dimensions, vector databases have become an essential component of AI infrastructure, powering large language models and other advanced AI applications.

There are several reasons why vector databases are well-suited to handle embeddings:

- Efficient storage: Vector databases are designed to store high-dimensional vectors efficiently, allowing them to handle large quantities of data while using minimal storage space. This is important for embeddings, which can have hundreds or thousands of dimensions.

- High-performance similarity search: Vector databases use specialized algorithms and data structures to perform high-performance similarity searches on embeddings. This allows users to quickly find the closest embeddings to a given query, making them well-suited for tasks such as image or text similarity search.

- Scalability: Vector databases are highly scalable, allowing them to easily handle large datasets. This is important for embeddings, which are often used in large language models and other AI applications that require vast amounts of data.

- Flexibility: Vector databases can handle various data types, including text, images, audio, and video. This makes them well-suited for a wide range of AI applications.

Overall, the specialized design of vector databases makes them well-suited for handling high-dimensional vectors such as embeddings, making them a crucial component of modern AI infrastructure.
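To give a feel for what a “specialized data structure” for similarity search can look like, here is a toy sketch of locality-sensitive hashing with random hyperplanes. This is a deliberately simple technique chosen for illustration, not the algorithm used by any particular product mentioned in this article. The class name `RandomHyperplaneIndex` and its parameters are assumptions of this sketch.

```python
import random
from collections import defaultdict


class RandomHyperplaneIndex:
    """Toy locality-sensitive hashing index for cosine-style similarity."""

    def __init__(self, dim, num_planes=8, seed=0):
        rng = random.Random(seed)
        # Each hyperplane is described by a random normal vector.
        self.planes = [
            [rng.gauss(0, 1) for _ in range(dim)] for _ in range(num_planes)
        ]
        self.buckets = defaultdict(list)

    def _signature(self, vec):
        # One bit per hyperplane: on which side of the plane the vector lies.
        return tuple(
            sum(p * v for p, v in zip(plane, vec)) >= 0
            for plane in self.planes
        )

    def add(self, item_id, vec):
        self.buckets[self._signature(vec)].append((item_id, vec))

    def candidates(self, query):
        # Only the query's bucket is inspected, not the whole dataset.
        bucket = self.buckets.get(self._signature(query), [])
        return [item_id for item_id, _ in bucket]


index = RandomHyperplaneIndex(dim=3)
index.add("pizza", [0.9, 0.1, 0.0])
index.add("car", [0.0, 0.9, 0.8])

# A query pointing in the same direction as "pizza" lands in its bucket.
print(index.candidates([1.8, 0.2, 0.0]))
```

Vectors that point in similar directions tend to fall into the same bucket, so a query only needs to be compared against a small candidate set. The trade-off is that results are approximate: a close neighbor can occasionally end up in a different bucket.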

Semantic Search as a Way to Create Custom ChatGPT

OpenAI’s approach to embeddings is an unsupervised learning method known as “representation learning.” The model learns to represent the data in a useful way for downstream tasks like natural language processing without being explicitly told what features to extract or how to represent the data. This approach has been highly effective in training LLMs, which can generate human-like text with remarkable accuracy.

However, one limitation of OpenAI’s models is that they can only process a limited amount of input at a time. For example, the GPT-3.5 model behind ChatGPT has a context limit of 4,096 tokens, which means that it cannot take a large knowledge base as input without additional techniques. This is where embeddings come into play.
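One common technique for working within such a limit is to split long documents into smaller chunks before embedding them. The sketch below is a rough illustration that assumes one whitespace-separated word counts as one token. Real tokenizers split text differently, so the counts are only an approximation, and the function name `chunk_by_token_budget` is hypothetical.

```python
def chunk_by_token_budget(text, max_tokens):
    """Split text into chunks of at most max_tokens whitespace-separated words."""
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    words = text.split()
    return [
        " ".join(words[i : i + max_tokens])
        for i in range(0, len(words), max_tokens)
    ]


manual = "replace the pump filter every six months and check the seals"
for chunk in chunk_by_token_budget(manual, max_tokens=4):
    print(chunk)
```

Each chunk can then be embedded and stored separately, so that only the most relevant chunks need to be passed to the model.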

Vector databases are becoming increasingly popular for their ability to find meaning in unstructured data, which is a vital feature for advanced AI applications such as semantic search. A semantic search application offers a ChatGPT-like experience, but it operates on a custom knowledge base. The knowledge can be anything from customer relationship management (CRM) data to technical manuals and research and development (R&D) information. The data needs to be stored somewhere and support querying at low latency, and vector databases are well suited to this task due to the advantages mentioned above. The growing popularity of vector databases is therefore also a result of the growing interest of companies in creating custom ChatGPT applications based on their internal knowledge.
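The overall flow of such an application can be sketched in a few lines: embed the question, retrieve the most similar entries from the knowledge base, and place them into the prompt sent to the language model. In the sketch below, `embed` is a hypothetical stand-in that uses simple word counts instead of a real embedding model, the knowledge base is a plain Python list instead of a vector database, and the prompt wording is invented for illustration.

```python
import math
from collections import Counter


def embed(text):
    """Hypothetical stand-in for an embedding model: sparse word counts."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question, documents, k=2):
    """Return the k documents most similar to the question."""
    q = embed(question)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(question, documents, k=2):
    """Combine the retrieved context and the question into one prompt."""
    context = "\n".join(retrieve(question, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


knowledge_base = [
    "the warranty period for the pump is two years",
    "the office is closed on public holidays",
    "replace the pump filter every six months",
]
print(build_prompt("how long is the pump warranty", knowledge_base, k=1))
```

In a real setup, the vector database takes over the `retrieve` step, and a learned embedding model replaces the word counts so that matches are based on meaning rather than shared words.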

Increasing Investments in Vector Database Startups

Given the recent hype around AI, it’s no wonder that companies are investing heavily in vector databases to improve the accuracy and efficiency of their algorithms. This trend is reflected in the recent funding rounds of vector database startups such as Pinecone, Chroma, and Weaviate. However, established players in the field also offer solutions that can be used to build AI applications on top of custom knowledge bases. For example, Microsoft’s Azure Cognitive Search is a powerful solution that businesses can use to build and deploy AI applications that leverage vector search capabilities. Vertex AI Matching Engine is another vector search solution, developed by Google. Despite the competition from new startups, established players like Microsoft and Google remain competitive and continue to offer valuable solutions for businesses seeking to implement vector search in their AI workflows.

A Close Look at Popular Vector Databases: Pinecone, Chroma, and Weaviate

Finally, let’s take a look at three different dedicated vector databases that are optimized for working with vectors: Pinecone, Chroma, and Weaviate.

Pinecone

Pinecone is a cloud-native vector database designed for high-performance, low-latency, and scalable vector similarity search. It can handle both dense and sparse vectors, making it a versatile choice for a wide range of use cases. Pinecone provides an easy-to-use API that allows users to add, search, and retrieve vectors with just a few lines of code. It also offers hybrid search functionality, which enables users to mix traditional text-based search with vector search.

One of the key advantages of Pinecone is its scalability. It can handle billions of vectors and provides automatic sharding and load balancing to ensure that search requests are distributed evenly across the available resources. Pinecone also offers real-time indexing and search. This means that new vectors are available for search immediately after they are added.

Chroma

Chroma is a simple, lightweight, open-source vector database that can be used to build an in-memory document-vector store. It provides an easy-to-use API and is an excellent choice for users who want a quick and simple solution for vector similarity search. By default, Chroma vectorizes documents with a Sentence Transformers model, but it can also be configured to use custom embedding models.

One of the key advantages of Chroma is its simplicity. It can be set up and configured quickly and easily and doesn’t require any special hardware or software. Chroma is also highly customizable, supporting custom vectorization models, custom similarity functions, and more. The Chroma Python library is available as the chromadb package.

Weaviate

Weaviate is a feature-rich vector database designed for complex data modeling and search use cases. It provides a GraphQL API with support for vector similarity search and a range of other advanced search and filtering features. Weaviate can store and search various data types, including structured data, unstructured data, and images.

One of the key advantages of Weaviate is its flexibility. It can be used to build highly customized search applications with complex data models and search requirements. Weaviate also provides advanced search and filtering features, including geospatial search, range search, and fuzzy search. It also supports data federation, which enables users to search across multiple data sources.

Other Noteworthy Vector Databases

The vector databases above are just some examples. Several other vector databases and similarity search libraries may be worth a look too:

- Faiss: Developed by Facebook’s AI Research team, Faiss provides efficient similarity search and clustering of dense vectors.

- Hnswlib: An open-source library for Approximate Nearest Neighbor Search, Hnswlib offers excellent speed and accuracy with minimal resource usage.

- Milvus: An open-source vector database designed for AI and analytics, Milvus offers scalable, reliable, and customizable solutions.

- Qdrant: A vector similarity search engine with extended filtering capabilities, Qdrant is designed for organizing and searching large-scale vector data.

Additionally, some databases, while not specifically designed for vectors, can handle vectors more efficiently than traditional SQL databases. Examples include Azure Cosmos DB, Elasticsearch, and Redis.

Summary

OpenAI’s advancements may have fueled the initial hype around AI, but the infrastructure demand to support AI applications is now on the rise. Vector databases are increasingly in the spotlight due to their proficiency in managing unstructured data and their efficiency in conducting similarity searches across large collections of high-dimensional vectors. With the escalating demand for AI infrastructure, vector databases are anticipated to continue gaining momentum. As businesses and organizations strive to leverage the power of AI, the reliance on vector databases is likely to grow, making them a cornerstone of future AI infrastructure.

I hope this article was helpful. If you have any remarks or comments, please let me know in the comments.

ChatGPT helped to revise this article. Images were generated with Midjourney.
