Content-based recommender systems are a popular type of machine learning algorithm that recommends relevant articles based on what a user has previously consumed or liked. This approach aims to identify items with certain keywords, understand what the customer likes, and then identify other items that are similar to items the user has previously consumed or rated. The recommendations are based on the similarity of the items, represented by similarity scores in a vector matrix. The attributes used to describe an item are called “content.” For example, in the case of movie recommendations, content could be the genre, actors, director, year of release, etc. A well-designed content-based recommendation service will suggest movies of the same genre, actors, or keywords. This tutorial will implement a content-based recommendation service for movies using Python and Scikit-learn.

The rest of this tutorial proceeds as follows: After a brief introduction to content-based recommenders, we will work with a database that contains several thousand IMDB movie titles and create a feature model that uses actors, release year, and a short description for each movie. You will also learn how to deal with some challenges of building a content-based recommender. For example, we will look at how to engineer word features for a content-based model and how to reduce the dimensionality of the model. Finally, we use our model to generate some sample predictions.

Note: Another popular type of recommender system that I have covered in a previous article is collaborative filtering.

What is Content-Based Filtering?

The idea behind content-based recommenders is to generate recommendations based on a user’s preferences and tastes. These preferences revolve around past user choices, for example, the number of times a user has watched a movie, purchased an item, or clicked on a link.

Content-based filtering uses domain-specific item features to measure the similarity between items. Given the user preferences, the algorithm will recommend items similar to what the user has consumed or liked before. For movie recommendations, this content can be the genre, actors, release year, director, film length, or keywords used to describe the movies. This approach works particularly well for domains with a lot of textual metadata, such as movies and videos, books, or products.

Basic Steps to Building a Content-based Recommender System

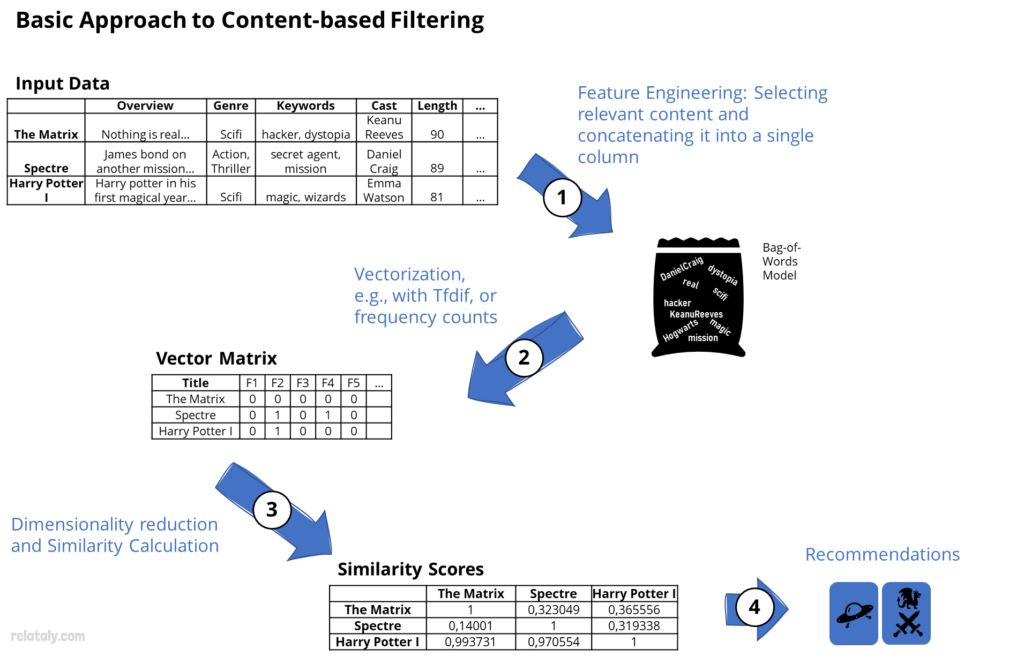

The approach to building a content-based recommender involves four essential steps:

- The first step is to create a so-called ‘bag of words’ model from the input data, which is a list of words used to characterize the items. This step involves selecting useful content for describing and differentiating the items. The more precise the information, the better the recommendations will be.

- The next step is to turn the bag (of words) into a feature vector. Different algorithms can be used for this step, for example, the TF-IDF vectorizer or the count vectorizer. The result is a vector matrix with items as records and features as columns. This step often also includes applying techniques for dimensionality reduction.

- The idea of content-based recommendations is based on measuring item similarity. Similarity scores are assigned through pairwise comparison. Here again, we can choose between different measures, e.g., the dot product or cosine similarity.

- Once you have the similarity scores, you can return the most similar items by sorting the data by similarity scores. Given user preferences (single or multiple items a user consumed or liked), the algorithm will then recommend the most similar items.
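The four steps above can be sketched in a few lines of plain Python. This is only a toy illustration under stated assumptions: the three movie titles and their tags are made up, the vectors are raw word counts, and the similarity measure is the plain dot product. The tutorial itself uses scikit-learn for these steps.

```python
from collections import Counter

# Step 1: a bag of words per item (hypothetical toy tags, not from the dataset)
items = {
    "Space Quest": "space alien robot adventure",
    "Robot Wars": "robot war future space",
    "Love Letters": "romance letter paris",
}

# Step 2: turn each bag into a count vector over a shared vocabulary
vocabulary = sorted({word for text in items.values() for word in text.split()})

def to_vector(text):
    counts = Counter(text.split())
    return [counts[word] for word in vocabulary]

vectors = {title: to_vector(text) for title, text in items.items()}

# Step 3: pairwise similarity, here the plain dot product
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

# Step 4: rank all other items by their similarity to the item the user liked
def recommend(liked, n=2):
    scores = [(title, dot(vectors[liked], vec))
              for title, vec in vectors.items() if title != liked]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)[:n]

print(recommend("Space Quest"))  # [('Robot Wars', 2), ('Love Letters', 0)]
```

"Robot Wars" ranks first because it shares two tags ("space" and "robot") with "Space Quest", while "Love Letters" shares none.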

Similarity Scoring

The quality of the content-based recommendations is significantly influenced by how well the algorithm succeeds in measuring the similarity of the items. There are different techniques to calculate similarity, including Cosine Similarity, Pearson Similarity, Dot Product, and Euclidean Distance. They have in common that they use numerical characteristics of the text to calculate the distance between text vectors in an n-dimensional vector space.
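To get a feeling for how these measures differ, the following sketch compares three of them on hand-made count vectors. The vectors and function names are illustrative assumptions and are not taken from the movie data.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cosine_sim(a, b):
    # compares the direction of two vectors and ignores their length
    return dot(a, b) / math.sqrt(dot(a, a) * dot(b, b))

def euclidean_distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

short_text = [1, 2, 0]
long_text = [2, 4, 0]   # same word proportions, but twice as many words
other_text = [0, 0, 3]  # shares no words with the other two

print(dot(short_text, long_text))                 # 10
print(cosine_sim(short_text, long_text))          # 1.0
print(euclidean_distance(short_text, long_text))  # about 2.24
print(cosine_sim(short_text, other_text))         # 0.0
```

The dot product and the Euclidean distance react to the length of a description, whereas the cosine similarity treats the short and the long text as identical in content. This is one reason why cosine similarity is a common choice for text of varying length.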

It is worth noting that these techniques can only measure word-level similarity. This means the algorithms compare the items word for word without considering the semantic meaning of the sentences. In some instances, this can lead to errors. For example, how similar are “now that they were sitting on a bank, he noticed she stole his heart, and he was in love” and “They are gangsters who love to steal from a large bank”? Judged by the words alone, the two sentences may appear similar because they share several words, although their meanings have little in common.
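The overlap in the example can be made visible with a short sketch. The tokenizer below is a deliberately naive assumption (lowercase, letters only) and is not the one used later in the tutorial.

```python
import re

def tokens(text):
    # naive tokenizer: lowercase the text and keep runs of letters
    return set(re.findall(r"[a-z]+", text.lower()))

romance = ("now that they were sitting on a bank, he noticed she stole "
           "his heart, and he was in love")
crime = "They are gangsters who love to steal from a large bank"

shared = tokens(romance) & tokens(crime)
print(sorted(shared))  # ['a', 'bank', 'love', 'they']
```

Four of the eleven words in the second sentence also occur in the first one, including "bank" and "love", even though a river bank and a robbed bank have nothing in common. A word-level model cannot tell the two apart.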

Pros and Cons of Content-based Filtering

Like most machine learning algorithms, content-based recommenders have their strengths and weaknesses.

Advantages

- Content-based filtering is good at capturing a user’s specific interests and will recommend more of the same (for example, genre, actors, directors, etc.). It will also recommend niche items if they match the user preferences, even if these items draw little attention.

- Another advantage is that the model can generate recommendations for a specific user without the knowledge of other users. This is particularly helpful if you want to generate predictions for many users.

Disadvantages

- On the other hand, there are also a couple of downsides. The feature representation of the items has to be done manually to a certain extent, and the prediction quality strongly depends on whether items are described in detail. Therefore, content-based filtering requires a lot of expertise.

- Recommendations are based on the user’s previous interests, so they are unlikely to go beyond that and expand to areas (e.g., genres) that are still unknown to the user. Content-based models thus tend to develop some tunnel vision, so that the model recommends more and more of the same.

Implementing a Content-based Movie Recommender in Python

In the following, we will implement a content-based movie recommender using Python and Scikit-learn. We will carry out all steps necessary to create a content-based recommender. The data comes from an IMDB dataset containing more than 40k films between 1996 and 2018. Based on the data, we define the features we want to use for recommending the movies. These features include the genre, director, main actors, plot keywords, or other metadata associated with the movies. Then we preprocess the data to extract these features and create a feature matrix. The feature matrix becomes the foundation for a similarity matrix that measures the similarity between the items based on their feature vectors. Finally, we use the similarity matrix to generate recommendations for a given item.

By the end of this Python tutorial, you will have learned how to implement a content-based recommendation system for movies using Python and Scikit-learn. This knowledge can be applied to other types of recommendations, such as articles, products, or songs.

The code is available on the GitHub repository.

Prerequisites

Before you start with the coding part, ensure you have set up your Python 3 environment and required packages. If you don’t have an environment, consider the Anaconda Python environment. Follow this tutorial to set it up.

Also, make sure you install all required packages. In this tutorial, we will be working with the following standard packages: pandas, NumPy, Matplotlib, and scikit-learn.

In addition, we will be using Seaborn for visualization and the natural language processing library nltk.

You can install these packages by using one of the following commands:

- pip install <package name>

- conda install <package name> (if you are using the Anaconda package manager)

About the IMDB Movies Dataset

We will train our movie recommender on a popular Movies Dataset (you can download it from grouplens.org). The MovieLens recommendation service collected the Dataset from 610 users between 1996 and 2018. Unpack the data into the working folder of your project.

The full Dataset contains metadata on over 45,000 movies and 26 million ratings from over 270,000 users. The Dataset contains the following files (Source of the data description: Kaggle.com):

- movies_metadata.csv: The main Movies Metadata file contains information on 45,000 movies featured in the Full MovieLens Dataset. Features include posters, backdrops, budget, revenue, release dates, languages, production countries, and companies.

- ratings_small.csv: The subset of 100,000 ratings from 700 users on 9,000 movies. Each line corresponds to a 5-star movie rating with half-star increments (0.5 – 5.0 stars).

- keywords.csv: Contains the movie plot keywords for our MovieLens movies. Available in the form of a stringified JSON Object.

- credits.csv: Consists of Cast and Crew Information for all our films. Available in the form of a stringified JSON Object.

Several other files are included that we won’t use, incl. ratings_small, links_small, and links.

You can download it here or from Kaggle.

Step #1: Load the Data

Our goal is to create a content-based recommender system for movie recommendations. In this case, the content will be meta information on movies, such as genre, actors, and the description.

We begin by making imports and loading the data from three files:

- movies_metadata.csv

- credits.csv

- keywords.csv

import numpy as np

import pandas as pd

import seaborn as sns

import matplotlib.pyplot as plt

sns.set_style('white', { 'axes.spines.right': False, 'axes.spines.top': False})

from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer

from sklearn.metrics.pairwise import cosine_similarity

from sklearn.decomposition import TruncatedSVD

from nltk.corpus import stopwords

# the IMDB movies data is available on Kaggle.com

# https://www.kaggle.com/datasets/rounakbanik/the-movies-dataset

# in case you have placed the files outside of your working directory, you need to specify the path

path = 'data/movie_recommendations/'

# load the movie metadata

df_meta=pd.read_csv(path + 'movies_metadata.csv', low_memory=False, encoding='UTF-8')

# some records have invalid ids, which is why we remove them

df_meta = df_meta.drop([19730, 29503, 35587])

# convert the id to type int and set id as index

df_meta = df_meta.set_index(df_meta['id'].str.strip().str.replace(',', '', regex=False).astype(int))

pd.set_option('display.max_colwidth', 20)

df_meta.head(2)

adult belongs_to_collection budget genres homepage id imdb_id original_language original_title overview ... release_date revenue runtime spoken_languages status tagline title video vote_average vote_count

id

862 False {'id': 10194, 'n... 30000000 [{'id': 16, 'nam... http://toystory.... 862 tt0114709 en Toy Story Led by Woody, An... ... 1995-10-30 373554033.0 81.0 [{'iso_639_1': '... Released NaN Toy Story False 7.7 5415.0

8844 False NaN 65000000 [{'id': 12, 'nam... NaN 8844 tt0113497 en Jumanji When siblings Ju... ... 1995-12-15 262797249.0 104.0 [{'iso_639_1': '... Released Roll the dice an... Jumanji False 6.9 2413.0

After we have loaded credits and keywords, we will combine the data into a single dataframe. Now we have various input fields available. However, we will only use keywords, cast, year of release, genres, and overview. If you like, you can enhance the data with additional inputs, for example, budget, running time, or film language.

Once we have gathered our data in a single dataframe, we print out the first rows to gain an overview of the data.

# load the movie credits

df_credits = pd.read_csv(path + 'credits.csv', encoding='UTF-8')

df_credits = df_credits.set_index('id')

# load the movie keywords

df_keywords=pd.read_csv(path + 'keywords.csv', low_memory=False, encoding='UTF-8')

df_keywords = df_keywords.set_index('id')

# merge everything into a single dataframe

df_k_c = df_keywords.merge(df_credits, left_index=True, right_on='id')

df = df_k_c.merge(df_meta[['release_date','genres','overview','title']], left_index=True, right_on='id')

df.head(3)

keywords cast crew release_date genres overview title

id

862 [{'id': 931, 'na... [{'cast_id': 14,... [{'credit_id': '... 1995-10-30 [{'id': 16, 'nam... Led by Woody, An... Toy Story

8844 [{'id': 10090, '... [{'cast_id': 1, ... [{'credit_id': '... 1995-12-15 [{'id': 12, 'nam... When siblings Ju... Jumanji

15602 [{'id': 1495, 'n... [{'cast_id': 2, ... [{'credit_id': '... 1995-12-22 [{'id': 10749, '... A family wedding... Grumpier Old Men

We can see that keywords, cast, crew, and genres have a dictionary-like structure: each cell holds a stringified list of dictionaries. To create a cosine similarity matrix, we need to extract the relevant names from these columns and gather them in a single column. This is what we will do in the next step.
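As a minimal sketch of this extraction, the snippet below parses one stringified cell and joins the names into tokens. The cell value is a shortened, hypothetical example in the style of the cast column, and `names_as_tokens` is a helper name made up for this illustration. It uses `ast.literal_eval` from the standard library, which only accepts Python literals and is therefore a stricter alternative to `eval`.

```python
from ast import literal_eval

# shortened, hypothetical cell value in the style of the cast column
raw_cast = "[{'cast_id': 14, 'name': 'Tom Hanks'}, {'cast_id': 15, 'name': 'Tim Allen'}]"

def names_as_tokens(raw):
    # parse the stringified list of dictionaries without executing arbitrary code
    entries = literal_eval(raw)
    # remove spaces inside names so that each person becomes a single token
    return " ".join(entry["name"].replace(" ", "") for entry in entries)

print(names_as_tokens(raw_cast))  # TomHanks TimAllen
```

Removing the space inside a name keeps first and last names together, so that "Tom Hanks" does not match every other "Tom" in the dataset.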

Step #2: Feature Engineering and Data Cleaning

A problem with modeling text is that machine learning algorithms have difficulty processing text directly. An essential step in creating content-based recommenders is bringing the text into a machine-readable form. This is what we call feature engineering.

2.1 Creating a Bag-of-Words Model

We begin with feature engineering and creating the bag of words. As mentioned, a bag of words is a list of words relevant to describe items in a dataset, such as films, and differentiate them. Creating a bag of words removes stopwords but preserves multiplicity so that words can occur multiple times in the concatenated text. Later, each word can be used as a feature in calculating cosine similarities.

The input for a bag of words does not necessarily come from a single input column. We will use keywords, genres, cast, and overview and merge them into a new single column that we call tags. Make sure to capture the text field’s nature. We will keep names and surnames together and not split them, as we will do with the words from the overview column. The result of this process is our bag.

In addition, we add the movie title and a new index (id), which will later ease working with the similarity matrix. Finally, we print the first rows of our feature dataframe.

# create an empty DataFrame

df_movies = pd.DataFrame()

# extract the keywords

df_movies['keywords'] = df['keywords'].apply(lambda x: [i['name'] for i in eval(x)])

df_movies['keywords'] = df_movies['keywords'].apply(lambda x: ' '.join([i.replace(" ", "") for i in x]))

# extract the overview

df_movies['overview'] = df['overview'].fillna('')

# extract the release year

df_movies['release_date'] = pd.to_datetime(df['release_date'], errors='coerce').dt.strftime('%Y').fillna('')

# extract the actors

df_movies['cast'] = df['cast'].apply(lambda x: [i['name'] for i in eval(x)])

df_movies['cast'] = df_movies['cast'].apply(lambda x: ' '.join([i.replace(" ", "") for i in x]))

# extract genres

df_movies['genres'] = df['genres'].apply(lambda x: [i['name'] for i in eval(x)])

df_movies['genres'] = df_movies['genres'].apply(lambda x: ' '.join([i.replace(" ", "") for i in x]))

# add the title

df_movies['title'] = df['title']

# merge fields into a tag field

df_movies['tags'] = (df_movies['keywords']+' '+df_movies['cast']+' '+df_movies['genres']+' '+df_movies['release_date']).str.strip()

# drop records with empty tags and duplicates

df_movies.drop(df_movies[df_movies['tags']==''].index, inplace=True)

df_movies.drop_duplicates(inplace=True)

# add a fresh index to the dataframe, which we will later use when referring to items in a vector matrix

df_movies['new_id'] = range(0, len(df_movies))

# Reduce the data to relevant columns

df_movies = df_movies[['new_id', 'title', 'tags']]

# display the data

pd.set_option('display.max_colwidth', 500)

pd.set_option('display.expand_frame_repr', False)

print(df_movies.shape)

df_movies.head(5)

new_id title tags

id

862 0 Toy Story jealousy toy boy friendship friends rivalry boynextdoor newtoy toycomestolifeTomHanks TimAllen DonRickles JimVarney WallaceShawn JohnRatzenberger AnniePotts JohnMorris ErikvonDetten LaurieMetcalf R.LeeErmey SarahFreeman PennJillette Animation Comedy Family 1995

8844 1 Jumanji boardgame disappearance basedonchildren'sbook newhome recluse giantinsectRobinWilliams JonathanHyde KirstenDunst BradleyPierce BonnieHunt BebeNeuwirth DavidAlanGrier PatriciaClarkson AdamHann-Byrd LauraBellBundy JamesHandy GillianBarber BrandonObray CyrusThiedeke GaryJosephThorup LeonardZola LloydBerry MalcolmStewart AnnabelKershaw DarrylHenriques RobynDriscoll PeterBryant SarahGilson FloricaVlad JuneLion BrendaLockmuller Adventure Fantasy Family 1995

15602 2 Grumpier Old Men fishing bestfriend duringcreditsstinger oldmenWalterMatthau JackLemmon Ann-Margret SophiaLoren DarylHannah BurgessMeredith KevinPollak Romance Comedy 1995

31357 3 Waiting to Exhale basedonnovel interracialrelationship singlemother divorce chickflickWhitneyHouston AngelaBassett LorettaDevine LelaRochon GregoryHines DennisHaysbert MichaelBeach MykeltiWilliamson LamontJohnson WesleySnipes Comedy Drama Romance 1995

11862 4 Father of the Bride Part II baby midlifecrisis confidence aging daughter motherdaughterrelationship pregnancy contraception gynecologistSteveMartin DianeKeaton MartinShort KimberlyWilliams-Paisley GeorgeNewbern KieranCulkin BDWong PeterMichaelGoetz KateMcGregor-Stewart JaneAdams EugeneLevy LoriAlan Comedy 1995

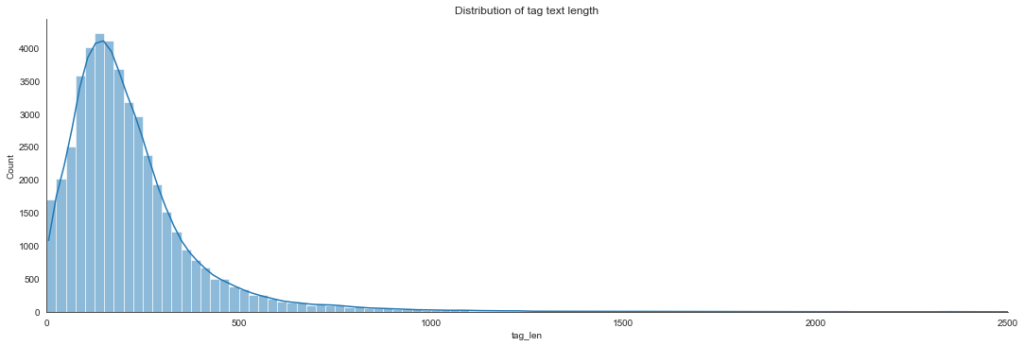

2.2 Visualizing Text Length

We can use a bar chart to illustrate each movie’s word bag length. This gives us an idea of how detailed the movie descriptions are. Items with short descriptions have, in principle, a lower probability of being recommended later. Recommenders produce better results if the length of the descriptions is somewhat balanced.

# add the tag length to the movies df

df_movies['tag_len'] = df_movies['tags'].apply(lambda x: len(x))

# illustrate the tag text length

sns.displot(data=df_movies.dropna(), bins=list(range(0, 2000, 25)), height=5, x='tag_len', aspect=3, kde=True)

plt.title('Distribution of tag text length')

plt.xlim([0, 2500])

Step #3: Vectorization using TfidfVectorizer

The next step is to create a vector matrix from the Bag of Words model. Each column from the matrix represents a word feature. This step is the basis for determining the similarity of the movies afterward. Before the vectorization, we will remove stop words from the text (e.g., and, it, that, or, why, where, etc.). In addition, I limited the number of features in the matrix to 5000 to reduce training time.

A simple vectorization approach is to determine the word frequency for each movie using a count vectorizer. However, a frequently mentioned disadvantage of this approach is that it does not consider how often a word occurs across the whole collection. For example, some words may appear in almost all items. On the other hand, some words may be prevalent in a few items but rare in general. We can argue that observing rare words in an item is more informative than observing common words. Instead of a count vectorizer, we will therefore use the TfidfVectorizer from the scikit-learn package.

Tfidf stands for term frequency-inverse document frequency. Compared to a count vectorizer, the tf-idf vectorizer considers the overall word frequencies and weights the general importance of the words when spanning the vectors. This way, tf-idf can determine which words are more important than others, reducing the model’s complexity and improving performance. This medium article explains the math behind tf-idf vectorization in more detail.
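The weighting idea can be illustrated with a small hand-rolled sketch. It assumes the smoothed inverse document frequency idf(t) = ln((1 + n) / (1 + df(t))) + 1 followed by L2 normalization, which is how the scikit-learn documentation describes its default settings. Treat that correspondence as an assumption and check it against your installed version. The three toy documents are made up.

```python
import math
from collections import Counter

# hypothetical toy documents, each one a bag of tags
docs = [
    "comedy family toy",
    "comedy family boardgame",
    "comedy romance fishing",
]

tokenized = [doc.split() for doc in docs]
n_docs = len(tokenized)
# document frequency: in how many documents does each word occur?
doc_freq = Counter(word for words in tokenized for word in set(words))

def idf(word):
    # smoothed inverse document frequency (assumed formula, see lead-in)
    return math.log((1 + n_docs) / (1 + doc_freq[word])) + 1

def tfidf_vector(words):
    counts = Counter(words)
    weights = {word: count * idf(word) for word, count in counts.items()}
    # L2 normalization so that every document vector has length 1
    norm = math.sqrt(sum(w * w for w in weights.values()))
    return {word: w / norm for word, w in weights.items()}

first = tfidf_vector(tokenized[0])
for word, weight in sorted(first.items(), key=lambda pair: pair[1]):
    print(word, round(weight, 3))
```

In the first document, "comedy" gets the lowest weight because it occurs in all three documents, "family" (two documents) comes next, and "toy" (one document) gets the highest weight.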

# set a custom stop list from nltk

stop = list(stopwords.words('english'))

# create the tf-idf vectorizer; alternatively, you can use CountVectorizer

# note: recent scikit-learn versions expect stop_words to be a list, not a set

tfidf = TfidfVectorizer(max_features=5000, analyzer='word', stop_words=stop)

vectorized_data = tfidf.fit_transform(df_movies['tags'])

count_matrix = pd.DataFrame(vectorized_data.toarray(), index=df_movies['tags'].index.tolist())

print(count_matrix)

0 1 2 3 4 5 6 7 8 9 ... 4990 4991 4992 4993 4994 4995 4996 4997 4998 4999 862 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 8844 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 15602 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 31357 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 11862 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 ... ... ... ... ... ... ... ... ... ... ... ... ... ... ... ... ... ... ... ... ... ... 439050 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 111109 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 67758 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 227506 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 461257 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 ... 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 0.0 [45432 rows x 5000 columns]

The vectorization process results in a feature matrix in which each feature is a word from the text bag of words.

We can display features with the get_feature_names_out function from the tfidf vectorizer.

# print feature names

print(tfidf.get_feature_names_out()[940:990])

['climbing' 'clinteastwood' 'clinthoward' 'clive' 'cliveowen' 'cliverevill' 'cliverussell' 'clone' 'clorisleachman' 'cloviscornillac' 'clown' 'clugulager' 'clydekusatsu' 'co' 'coach' 'cobb' 'cocaine' 'code' 'coffin' 'cohen' 'coldwar' 'cole' 'colehauser' 'coleman' 'colinfarrell' 'colinfirth' 'colinhanks' 'colinkenny' 'colinsalmon' 'colleencamp' 'college' 'colmfeore' 'colmmeaney' 'coma' 'combat' 'comedian' 'comedy' 'comicbook' 'comingofage' 'comingout' 'common' 'communism' 'communist' 'company' 'competition' 'composer' 'computer' 'con' 'concentrationcamp' 'concert']

As you can see, the features are individual tokens: ordinary words such as 'coach' or 'comedy' and concatenated names such as 'clinteastwood'.

Step #4: Dimensionality Reduction and Calculating Cosine Similarities

In the previous section, we created a vector matrix that contains movies and features. This matrix is the foundation for calculating similarity scores for all movies. Before we calculate the similarity scores, we will apply dimensionality reduction.

4.1 Dimensionality Reduction using SVD

The matrix spans a high-dimensional vector space with 5000 feature columns. Do we need all of these features? Most likely not. Many words in the matrix probably occur in only a few movies, while others may occur in almost all movies. How can we deal with this issue?

Both kinds of words add little information but inflate the vector space. By reducing this space to fewer, more essential features, we can save some time training our recommender model. We will use TruncatedSVD from the scikit-learn package, a popular algorithm for dimensionality reduction. The algorithm approximates the matrix in a lower-dimensional space, thereby reducing noise and model complexity.

This way, we will reduce the vector space from 5000 to 3000 features.
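In matrix notation, the idea behind a truncated SVD can be summarized as follows. The symbols are chosen for this illustration: X is the feature matrix with m = 45432 movies and n = 5000 features, k = 3000 is the number of retained components, and Z is the reduced matrix that we pass on to the similarity calculation.

```latex
X \approx X_k = U_k \Sigma_k V_k^{\top},
\qquad
X \in \mathbb{R}^{m \times n},\;
U_k \in \mathbb{R}^{m \times k},\;
\Sigma_k \in \mathbb{R}^{k \times k},\;
V_k \in \mathbb{R}^{n \times k}

Z = U_k \Sigma_k = X V_k \in \mathbb{R}^{m \times k}
```

Only the k largest singular values and their singular vectors are kept, so each movie is described by k coordinates instead of n.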

# reduce dimensionality for improved performance

svd = TruncatedSVD(n_components=3000)

reduced_data = svd.fit_transform(count_matrix)

4.2 Calculate Text Similarity Scores for all Movies

Now that we have reduced the complexity of our vector matrix, we can calculate the similarity scores for all movies. In this process, we assign a similarity score to all item pairs that measure content closeness according to the position of the items in the vector space.

We use the cosine similarity to score the movies. It measures the cosine of the angle between two vectors and therefore compares their direction rather than their length. In our case, the vectors are the reduced feature vectors of the movies. The cosine similarity function compares each movie to every other movie and assigns each pair a similarity value.

- A similarity value of -1 means that the two feature vectors point in opposite directions, so the movies are as dissimilar as possible.

- A value of 1 means that the two feature vectors point in the same direction, so the movies have identical content profiles.

- A value of 0 means that the feature vectors are orthogonal, so the movies share no features.
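For reference, the cosine similarity of two feature vectors a and b with n components is commonly written as shown here, with θ being the angle between the two vectors:

```latex
\operatorname{sim}(a, b) = \cos(\theta)
= \frac{a \cdot b}{\lVert a \rVert \, \lVert b \rVert}
= \frac{\sum_{i=1}^{n} a_i b_i}{\sqrt{\sum_{i=1}^{n} a_i^{2}} \, \sqrt{\sum_{i=1}^{n} b_i^{2}}}
```

Raw tf-idf weights are never negative, so their cosine similarities lie between 0 and 1. After the SVD step, the components can be negative, which is why slightly negative similarity values can show up as well.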

The cosine similarity function will calculate pairwise similarities for all movies in our vector matrix. We can determine the number of pairwise comparisons with the formula k²/2, whereby k is the number of items in the vector matrix. In our case, we have a k of 45000 movies. This means the cosine similarity function must calculate about 1 billion similarity scores. So don’t worry if the process takes some time to complete.
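The estimate can be verified with a quick back-of-the-envelope calculation. The memory figure rests on the assumption that the full k × k result is stored as a dense array of 8-byte floats.

```python
k = 45_000  # approximate number of movies

# number of distinct movie pairs (each pair counted once, no self-pairs)
unique_pairs = k * (k - 1) // 2
print(unique_pairs)  # 1012477500, roughly 1 billion

# a full k x k matrix stores every pair twice plus the diagonal
full_matrix_entries = k * k
bytes_needed = full_matrix_entries * 8  # assuming 8-byte floats
print(bytes_needed / 1e9)  # 16.2 (gigabytes)
```

Memory can therefore become a bottleneck before computing time does. If your machine runs out of memory, working with a subset of the movies is a simple way out.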

# compute the cosine similarity matrix

similarity = cosine_similarity(reduced_data)

similarity

array([[ 1.00000000e+00, 9.75542082e-02, 6.00755620e-02, ...,

-3.03965235e-04, 0.00000000e+00, 5.81243547e-05],

[ 9.75542082e-02, 1.00000000e+00, 5.92929339e-02, ...,

-2.97565163e-03, 0.00000000e+00, 4.57945869e-05],

[ 6.00755620e-02, 5.92929339e-02, 1.00000000e+00, ...,

9.40459504e-03, 0.00000000e+00, -2.22415551e-04],

...,

[-3.03965235e-04, -2.97565163e-03, 9.40459504e-03, ...,

1.00000000e+00, 0.00000000e+00, -2.60823346e-04],

[ 0.00000000e+00, 0.00000000e+00, 0.00000000e+00, ...,

0.00000000e+00, 0.00000000e+00, 0.00000000e+00],

[ 5.81243547e-05, 4.57945869e-05, -2.22415551e-04, ...,

-2.60823346e-04, 0.00000000e+00, 1.00000000e+00]])

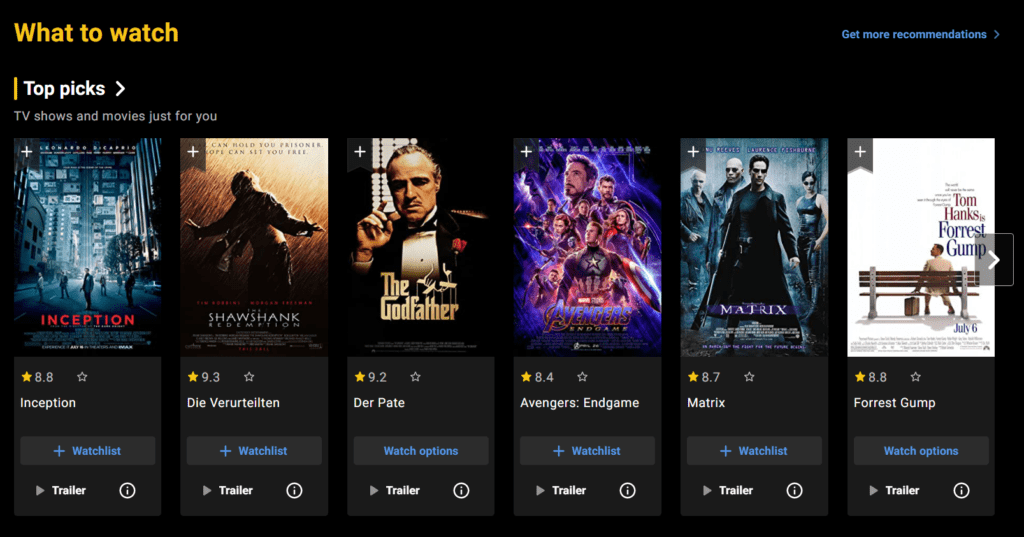

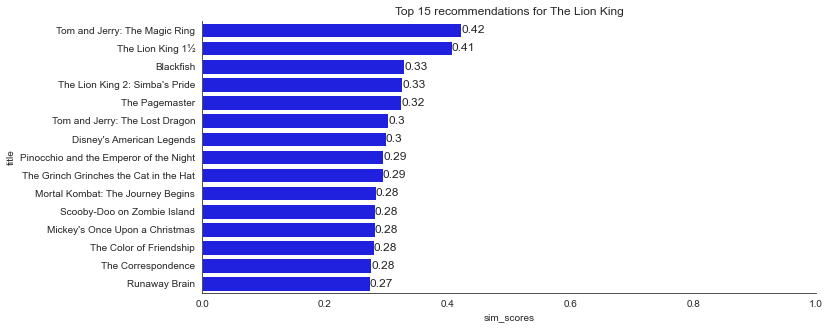

Step #5: Generate Content-based Movie Recommendations

Once you have created the similarity matrix, it’s time to generate some recommendations. We begin by generating recommendations based on a single movie. In the cosine similarity matrix, the most similar movies have the highest similarity scores. Once we have the films with the highest scores, we can visualize the results in a bar chart that shows the cosine similarity scores.

The example below displays the results of the movie “The Matrix.” Oh, how I love this movie 🙂

# create a function that takes in movie title as input and returns a list of the most similar movies

def get_recommendations(title, n, cosine_sim=similarity):

    # get the index of the movie that matches the title

    movie_index = df_movies[df_movies.title==title].new_id.values[0]

    print(movie_index, title)

    # get the pairwise similarity scores of all movies with that movie and sort the movies based on the similarity scores

    sim_scores_all = sorted(list(enumerate(cosine_sim[movie_index])), key=lambda x: x[1], reverse=True)

    # checks if recommendations are limited

    if n > 0:

        sim_scores_all = sim_scores_all[1:n+1]

    # get the movie indices of the top similar movies

    movie_indices = [i[0] for i in sim_scores_all]

    scores = [i[1] for i in sim_scores_all]

    # return the top n most similar movies from the movies df

    top_titles_df = pd.DataFrame(df_movies.iloc[movie_indices]['title'])

    top_titles_df['sim_scores'] = scores

    top_titles_df['ranking'] = range(1, len(top_titles_df) + 1)

    return top_titles_df, sim_scores_all

# generate a list of recommendations for a specific movie title

movie_name = 'The Matrix'

number_of_recommendations = 15

top_titles_df, _ = get_recommendations(movie_name, number_of_recommendations)

# visualize the results

def show_results(movie_name, top_titles_df):

    fig, ax = plt.subplots(figsize=(11, 5))

    sns.barplot(data=top_titles_df, y='title', x='sim_scores', color='blue')

    plt.xlim((0,1))

    plt.title(f'Top {len(top_titles_df)} recommendations for {movie_name}')

    pct_values = ['{:.2}'.format(elm) for elm in list(top_titles_df['sim_scores'])]

    ax.bar_label(container=ax.containers[0], labels=pct_values, size=12)

show_results(movie_name, top_titles_df)

Example for the movies “Spectre” and “The Lion King”

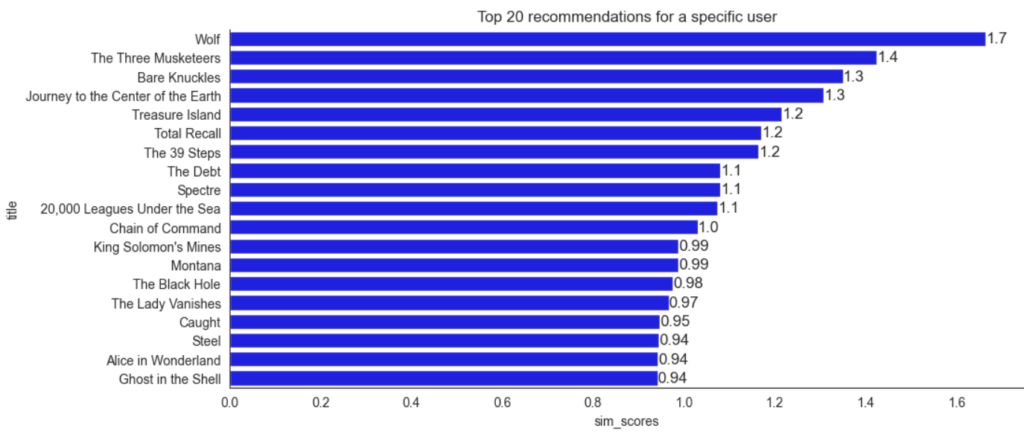
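The ranking step of Step #5 can also be written without sorting the complete list of scores. The following sketch is an alternative under stated assumptions, not the method used above: `top_n_similar` is a made-up helper, the score row is invented, and `heapq.nlargest` from the standard library picks the n best entries.

```python
import heapq

def top_n_similar(scores, item_index, n):
    # exclude the query item by its index instead of dropping the first sorted entry
    candidates = ((i, s) for i, s in enumerate(scores) if i != item_index)
    # pick the n highest scores without sorting the whole row
    return heapq.nlargest(n, candidates, key=lambda pair: pair[1])

# hypothetical row of the similarity matrix for the movie at index 0
row = [1.0, 0.10, 0.06, 0.45, 0.0, 0.31]
print(top_n_similar(row, item_index=0, n=3))  # [(3, 0.45), (5, 0.31), (1, 0.1)]
```

Excluding the query movie by index is a bit more robust than skipping the first element of the sorted list, because another movie with identical tags could share the top score.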

Step #6: Generate Recommendations Based on Multiple Movies

But what if you want to generate recommendations for specific users who have seen several movies? For this, we can aggregate the similarity scores for all films the user has seen. This way, we create a new dataframe that sums up the similarity scores. To return the top-recommended movies, we can sort this dataframe by similarity score and return the top elements.

# list of movies a user has seen

movie_list = ['The Lion King', 'Seven', 'RoboCop 3', 'Blade Runner', 'Quantum of Solace', 'Casino Royale', 'Skyfall']

# create a copy of the movie dataframe and add a column in which we aggregated the scores

user_scores = pd.DataFrame(df_movies['title'])

user_scores['sim_scores'] = 0.0

# top number of scores to be considered for each movie

number_of_recommendations = 10000

for movie_name in movie_list:

    top_titles_df, _ = get_recommendations(movie_name, number_of_recommendations)

    # aggregate the scores

    user_scores = pd.concat([user_scores, top_titles_df[['title', 'sim_scores']]]).groupby('title', as_index=False)['sim_scores'].sum()

# sort and print the aggregated scores

user_scores.sort_values(by='sim_scores', ascending=False)[1:20]
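The aggregation logic can be illustrated independently of pandas. In this sketch, the similarity values and the `similar` lookup are invented for illustration, and movies the user has already seen are filtered out of the result, which is a design choice that goes beyond the code above.

```python
from collections import defaultdict

# hypothetical similarity lookups: seen movie -> {candidate movie: score}
similar = {
    "Skyfall": {"Casino Royale": 0.5, "Spectre": 0.5, "Heat": 0.125},
    "Casino Royale": {"Skyfall": 0.5, "Spectre": 0.25, "Ronin": 0.25},
}

def recommend_for_user(seen, n=3):
    totals = defaultdict(float)
    for movie in seen:
        for candidate, score in similar[movie].items():
            # do not recommend what the user has already watched
            if candidate not in seen:
                totals[candidate] += score
    # highest aggregated score first
    return sorted(totals.items(), key=lambda pair: pair[1], reverse=True)[:n]

print(recommend_for_user(["Skyfall", "Casino Royale"]))
# [('Spectre', 0.75), ('Ronin', 0.25), ('Heat', 0.125)]
```

"Spectre" ends up on top because it is similar to both movies the user has seen, so its scores add up.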

Summary

In this tutorial, you have learned to implement a simple content-based recommender system for movie recommendations in Python. We have used several movie-specific details to calculate a similarity matrix for all movies in our dataset. Finally, we have used this model to generate recommendations for two cases:

- Films that are similar to a specific movie

- Films that are recommended based on the watchlist of a particular user.

A downside of content-based recommenders is that you cannot test their performance unless you know how users perceived the recommendations. This is because content-based recommenders can only determine which items in a dataset are similar. To understand how well the suggestions work, you must include additional data about actual user preferences.
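If such preference data is available, one common way to quantify the quality of a recommendation list is precision at k: the share of the top k recommendations that the user actually liked. The sketch below uses invented titles and a made-up helper name. It is one possible metric, not part of the tutorial code.

```python
def precision_at_k(recommended, liked, k):
    # share of the top-k recommendations that the user actually liked
    top_k = recommended[:k]
    if not top_k:
        return 0.0
    hits = sum(1 for title in top_k if title in liked)
    return hits / len(top_k)

# hypothetical recommendation list and user feedback
recommended = ["Spectre", "Ronin", "Heat", "Toy Story"]
liked = {"Spectre", "Heat"}

print(precision_at_k(recommended, liked, k=3))  # 2 of 3 hits, about 0.67
```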

More advanced recommenders will combine content-based recommendations with user-item interactions (e.g., collaborative filtering). Such models are called hybrid recommenders, but this is something for another article.

Sources and Further Reading

Below are some resources for further reading on recommender systems and content-based models.

Books

- Charu C. Aggarwal (2016) Recommender Systems: The Textbook

- Kim Falk (2019) Practical Recommender Systems

- Andriy Burkov (2020) Machine Learning Engineering

- Oliver Theobald (2020) Machine Learning For Absolute Beginners: A Plain English Introduction

- Aurélien Géron (2019) Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow: Concepts, Tools, and Techniques to Build Intelligent Systems

- David Forsyth (2019) Applied Machine Learning, Springer
